AI is everywhere. Literally. According to a Gallup survey, 85% of Americans use at least one of six devices with AI elements.[1] We’re talking Siri, Alexa, and navigation apps (think Waze and Google Maps), which, by the way, are the AI products US adults use most.[2]

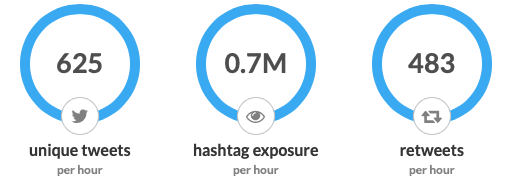

In the past 24 hours, #AI has gotten 7 million views on Twitter.

Image via RiteTag

We can’t help but be fascinated by it. C’mon: robots, driverless cars. This is the stuff of sci-fi novels and movies like Star Wars (C-3PO and R2-D2, anyone?) and Blade Runner. But did you know we’ve been thinking and talking about AI since the 8th century BCE? Or that the ancient Greeks dreamed up the first ‘killer robot’?

We’re also not the first ones to have a muddled relationship with it: President Kennedy spoke of technology taking over jobs in the 1960s.[3]

However, AI can also do a lot of good. In a Pew Research canvassing of experts, the three top solutions proposed for getting AI right were connecting across humanity, building values-based systems, and prioritizing people.[4]

But there’s more to unpack, including what AI looked like centuries ago, who its early fans were (cough, cough, Da Vinci), and why knowing this history matters.

Homer, Da Vinci, and Descartes Were Fans of AI

Sure, AI as we know it has only been around for several decades. But the idea of it dates back centuries.

While the term “artificial intelligence” wouldn’t be coined for thousands of years (try 1955, by John McCarthy), references to AI go as far back as the 8th century BCE, in Homer’s epic poem the Iliad, where Hephaestus (god of metalworking) created golden maidens, aka robots. He also supposedly made the “killer robot” Talos.[5]

Centuries later, Da Vinci, and later still René Descartes,[6] also jumped on the AI bandwagon (or, you could say, helped start it). Da Vinci even built a mechanical lion to entertain King François I of France, which has since been replicated.[7]

John McCarthy Said It First

Jumping ahead to 1955: in a proposal for a summer research conference at Dartmouth College (held the following year), computer scientist John McCarthy coins the term “artificial intelligence.”[8] This is the first time AI is named as the field we know today.

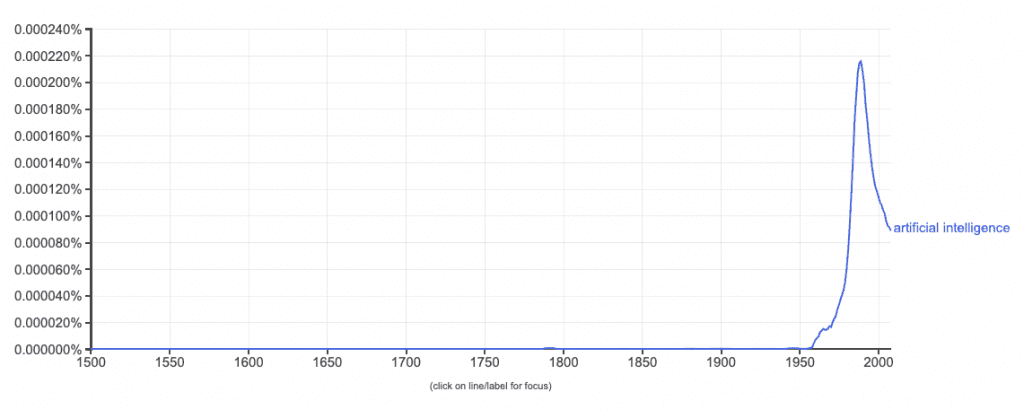

We can see this in Google’s Ngram Viewer, where the term “artificial intelligence” in English-language books gets a bump in the 50s and 60s, then a huge spike in 1989 that levels off by 2007 but still stays strong. (The x-axis runs from 1500 to 2008.)

AI Takes Off in the 50s

Before that, in 1950, British mathematician Alan Turing poses the question of whether a computer could communicate well enough to convince people it was human. This became known as the Turing Test.[9]

While earlier centuries were prime time for imagining AI, you can probably see by now that the 1950s were the real starting point. They launched the first AI boom, which continued through the 60s and tapered off in the late 70s, when the first AI winter hit.

During this time, MIT’s Artificial Intelligence Lab is founded, researchers at Cambridge work on semantic networks for machine translation, and IBM develops self-learning software.[10] AI becomes a new frontier of opportunity and possibility (sound familiar?).

Then, in the 70s and 80s, the US government pulls back AI funding (a lot),[11] and we enter an AI winter (not great news for computer scientists and researchers).

For those who don’t know, an “AI winter” is a period when AI funding dries up. Public interest usually drops off, too.[12] This won’t be the last one.

AI Gets a Second Wind in the 80s

Thankfully, it doesn’t last too long. In the 80s, expert systems,[13] rule-based programs designed to mimic the decision-making of human experts in a specific field,[14] catch on and help launch AI’s second boom.

Drummed-up fear of a large-scale Japanese AI effort, the Fifth Generation Computer Systems project, also pulls the US back into AI.[15]

It’s also worth noting that in 1986, researcher Geoff Hinton co-authors a paper on backpropagation, a learning algorithm that later becomes a cornerstone of deep learning.[16]

The 80s also produce the Connection Machine, a supercomputer, and one paper shifts the focus from building humanlike robots to building autonomous robots that don’t resemble humans.[17]

Resurgence Again

Eventually, AI enthusiasm tapers off in the 90s as we enter our second winter. But thanks to deep learning, a third AI boom, the one we’re in now, takes off in the early 2000s.[18]

Suddenly, the knowledge we’d built up (neural networks in the 40s, backpropagation in the 80s), combined with a wealth of data and significant computing power,[19] gives rise to Alexa, Siri, Waze, Tesla, you get the point.

AI is in medicine, where it’s starting to help experts read X-rays more accurately.[20] It’s even part of the art world,[21] and it has written a book.[22]

AI Now and Where It’s Going

AI is finally available in the practical applications people have long wanted. In fact, the US government cut funding in the 70s partly because it was tired of waiting for those applications.[23] Now that AI is here, businesses can’t wait to use it: according to Gartner, AI adoption in organizations tripled in the past year, and AI is a top priority for CIOs.[24]

While job loss is expected, especially in food service, truck driving, and clerical work, it’s not a robot apocalypse (knock on wood):

“A widespread misconception is that AI systems, including advanced robotics and digital bots, will gradually replace humans in one industry after another,” Paul Daugherty says in his book, Human + Machine.[25]

AI is really meant to assist,[26] not take over.

History hints at where we’re going by showing us where we’ve been. AI is here because it builds on the standardized, automated processes of the past. Where will it go from here? Will there be another AI winter? We’re not sure. Studies show humans aren’t that great at predictions.[27] But it’s still fun to guess.

Interested in getting started with AI? These resources can help: best machine learning courses, Python online courses, top computer science classes, and Java courses.

What do you think? Comment below!

References

[1] Gallup: Most Americans Already Using Artificial Intelligence Products

[2] Gallup: Most Americans

[3] Technology’s Stories: Broken Promises & Empty Threats: The Evolution of AI in the USA, 1956-1996

[4] Pew Research: Artificial Intelligence and the Future of Humans

[5] Nature: Ancient Dreams of Intelligent Machines: 3,000 Years of Robots

[6] Nature: Ancient Dreams

[7] Reuters: Da Vinci’s Lion Prowls Again After 500 years

[8] Stanford: Stanford’s John McCarthy, Seminal Figure of Artificial Intelligence, Dies at 84

[9] McKinsey: Artificial Intelligence: The Next Digital Frontier?

[10] McKinsey: Artificial Intelligence

[11] McKinsey: Artificial Intelligence

[13] Technology’s Stories: Broken Promises

[14] Moral Robots: What Are Expert Systems?

[15] Technology’s Stories: Broken Promises

[16] Wired: The Godfathers of the AI Boom Win Computing’s Highest Honor

[17] New York Times: Building Smarter Machines

[18] Technology’s Stories: Broken Promises

[19] Fortune: From 2016: Why Deep Learning Is Suddenly Changing Your Life

[20] NIH: National Experts Chart Roadmap for AI in Medical Imaging

[21] Hyperallergic: Using AI to Produce “Impossible” Tulips

[22] The Verge: The First AI-Generated Textbook Shows What Robot Writers Are Actually Good At

[23] McKinsey: Artificial Intelligence

[24] Gartner: Gartner Predicts the Future of AI Technologies

[25] Human + Machine: Reimagining Work in the Age of AI by Paul Daugherty

[26] Human + Machine

[27] Medium: Prophecy and Politics, or What are the Uses of the “Fourth Industrial Revolutions”?